SPE-54: Keyword Spotting

Unified Speculation, Detection, and Verification Keyword Spotting

Geng-shen Fu, Thibaud Senechal, Aaron Challenner, Tao Zhang, Amazon Alexa Science

Problem

- Accurate and timely recognition of the trigger keyword is vital.

- Accuracy must be traded off against latency.

Proposed method

- We propose a CRNN-based unified speculation, detection, and verification (USDV) keyword detection model.

- We propose a latency-aware max-pooling loss, and show empirically that it teaches a model to maximize accuracy under the latency constraint.

- A USDV model can be trained in a multi-task learning (MTL) fashion and achieves a different accuracy-latency trade-off for each of the three tasks.

1. Unified speculation, detection, and verification model

- Speculation makes an early decision, which can be used to give a head-start to downstream processes on the device.

- Detection mimics the traditional keyword trigger task and gives a more accurate decision by observing the full keyword context.

- Verification verifies the previous decision by observing even more audio after the keyword span.

2. Model architecture and training strategy

- CRNN architecture

- Multi-task learning with a different target latency per task, trained with the newly proposed latency-aware max-pooling loss.
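The poster does not give the formula for the latency-aware max-pooling loss, so the following is only a minimal sketch of one plausible reading. It assumes that, for a keyword utterance, the loss max-pools the keyword posterior over frames no later than the keyword end plus a target latency, and that for a non-keyword utterance it penalizes the most confident false-alarm frame. The function names, the window definition, and the latency values in the usage lines are all hypothetical and are not taken from the authors' implementation.

```python
import math

EPS = 1e-12  # numerical guard against log(0)


def latency_aware_max_pool_loss(posteriors, is_keyword, keyword_end, target_latency):
    """Hypothetical sketch of a max-pooling loss restricted to a latency window.

    posteriors: per-frame keyword probabilities in [0, 1]
    keyword_end: index of the last keyword frame (ignored for negatives)
    target_latency: allowed delay in frames relative to keyword_end
                    (negative = must fire early, positive = may fire late)
    """
    if is_keyword:
        # Assumption: only frames up to keyword_end + target_latency may fire.
        last = max(0, min(len(posteriors) - 1, keyword_end + target_latency))
        best = max(posteriors[: last + 1])
        return -math.log(best + EPS)
    # Assumption: negatives are penalized at their most confident frame.
    worst = max(posteriors)
    return -math.log(1.0 - worst + EPS)


def usdv_loss(task_posteriors, is_keyword, keyword_end, task_latencies):
    """Multi-task sum of the per-task losses, one target latency per task."""
    return sum(
        latency_aware_max_pool_loss(
            task_posteriors[task], is_keyword, keyword_end, latency
        )
        for task, latency in task_latencies.items()
    )


# Usage with made-up numbers: the same posteriors scored under three latencies.
frames = [0.1, 0.2, 0.9, 0.95]
latencies = {"speculation": -1, "detection": 0, "verification": 1}
total = usdv_loss({t: frames for t in latencies}, True, 2, latencies)
```

Under this reading, a tighter (earlier) target latency leaves fewer frames to pool over, so the speculation head is pushed to become confident sooner, while the verification head is allowed to wait for audio after the keyword span.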

Temporal Early Exiting for Streaming Speech Commands Recognition

Comcast Applied AI, University of Waterloo

Problem

Voice queries take time to process:

Stage 1: The user is speaking (seconds).

Stage 2: Finish ASR transcription (~50ms).

Stage 3: Information retrieval (~500ms).

Proposed method

- Use a streaming speech commands model for the top-K voice queries.

- Apply a training objective that encourages early exiting across time, so that the model returns a prediction before the entire audio is observed.

- Use early exiting with a confidence threshold to adjust the latency-accuracy trade-off.
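The confidence-threshold rule can be sketched as follows. This is a minimal illustration, assuming the model emits one probability distribution over the K commands per frame and that the exit criterion is simply the top class probability reaching the threshold; the function name `early_exit` and the fallback to the last frame are assumptions, not details taken from the poster.

```python
def early_exit(frame_probs, threshold):
    """Return (exit_frame, predicted_class) for one streamed utterance.

    frame_probs: list of per-frame probability lists, one entry per class
    threshold: confidence needed to stop listening early
    """
    for t, probs in enumerate(frame_probs):
        best_class = max(range(len(probs)), key=probs.__getitem__)
        if probs[best_class] >= threshold:
            # Confident enough: stop before the rest of the audio arrives.
            return t, best_class
    # Assumption: if no frame is confident, fall back to the final frame.
    last = frame_probs[-1]
    return len(frame_probs) - 1, max(range(len(last)), key=last.__getitem__)


# Made-up per-frame outputs for K = 3 commands.
stream = [
    [0.40, 0.30, 0.30],
    [0.50, 0.30, 0.20],
    [0.80, 0.10, 0.10],
    [0.95, 0.03, 0.02],
]
exit_frame, command = early_exit(stream, threshold=0.7)
```

Raising the threshold delays the exit and typically improves accuracy; lowering it exits sooner at the risk of more errors, which is the latency-accuracy knob described above.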

Model

- GRU Model

- Per-frame output probability distribution over K commands (classes).

Early-Exiting Objectives

Connectionist temporal classification (CTC):

Last-frame cross entropy (LF):

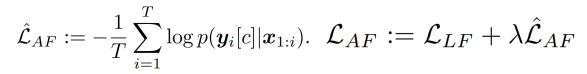

All-frame cross entropy (AF):
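Reading the objective names literally, LF scores only the final frame's distribution against the true command, while AF averages the same cross entropy over every frame. The sketch below assumes exactly that and nothing more; the function names are hypothetical, the CTC objective is not covered, and the sketch does not attempt to model what the 0.5 in "AF-0.5" denotes.

```python
import math


def last_frame_ce(frame_probs, label):
    """Cross entropy of the true class at the final frame only."""
    return -math.log(frame_probs[-1][label])


def all_frame_ce(frame_probs, label):
    """Cross entropy of the true class averaged over every frame."""
    return sum(-math.log(p[label]) for p in frame_probs) / len(frame_probs)


# Made-up outputs for a 2-class problem where the true class is 0.
probs = [[0.5, 0.5], [0.8, 0.2]]
lf = last_frame_ce(probs, 0)
af = all_frame_ce(probs, 0)
```

Because AF also penalizes uncertain early frames, it gives the model a direct incentive to be correct before the utterance ends, which LF alone does not.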

Findings

1. The all-frame objective (AF) performs best, perhaps because it explicitly trains the hidden features to be more discriminative, similar to deep supervision [1].

2. The observed indices correlate with the optimal indices for all models and datasets, with the AF-0.5 model consistently exiting earlier than the LF one does.