This paper presents a new model, SpeechUT, which aims to bridge the gap between speech and text representations in the context of pre-training for speech-to-text tasks.

Key Takeaways:

- Tasks: SpeechUT incorporates three unsupervised pre-training tasks: speech-to-unit (S2U), masked unit modeling (MUM), and unit-to-text (U2T). These tasks help to learn better representations for the speech and text modalities.

- Architecture: SpeechUT comprises a speech encoder, unit encoder, and text decoder, along with speech and unit pre-nets to process the inputs.

- Unified-Modal Speech-Unit-Text Pre-training Model (SpeechUT): The proposed model connects speech and text representations through a shared unit encoder, using hidden-unit representations as the bridge between the speech encoder and the text decoder. Because it can be pre-trained on unpaired speech and text data, it can benefit tasks such as automatic speech recognition (ASR) and speech translation (ST).

- Discrete Representation (Units): SpeechUT leverages hidden-unit representations as an interface to align speech and text. It does so by decomposing the speech-to-text model into a speech-to-unit model and a unit-to-text model, each of which can be pre-trained separately on large amounts of unpaired data. The discrete unit sequences are produced by off-line generators, which makes pre-training on large-scale unpaired speech and text possible.

- Embedding Mixing: An embedding mixing mechanism is introduced to better align speech and unit representations.

- Pre-Training and Fine-Tuning Methods: The paper describes how SpeechUT is pre-trained with the mentioned tasks and fine-tuned for specific ASR and ST tasks.

- Pre-Training Tasks: SpeechUT includes three unsupervised pre-training tasks: speech-to-unit, masked unit modeling, and unit-to-text.

- Fine-Tuning: For downstream tasks like ASR and ST, SpeechUT is fine-tuned without introducing new parameters, utilizing the pre-trained modules.
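To make the embedding mixing idea above more concrete, here is a minimal sketch in plain Python. It assumes one plausible reading of the mechanism: at randomly chosen positions, the speech-encoder output is swapped for the embedding of the frame-aligned unit before the sequence enters the shared unit encoder. The function name `mix_embeddings`, the `mix_prob` value, and the toy lookup table are all hypothetical and not taken from the paper or its released code.

```python
import random


def mix_embeddings(speech_states, unit_ids, unit_embedding, mix_prob=0.5, seed=0):
    """Swap a random subset of speech frames for their aligned unit embeddings.

    speech_states : list of T vectors (speech-encoder outputs), each a list of floats
    unit_ids      : list of T ints, the frame-aligned discrete unit at each position
    unit_embedding: list of vectors, one per unit id (hypothetical lookup table)
    mix_prob      : chance that a given frame is replaced (assumed value)
    """
    if len(speech_states) != len(unit_ids):
        raise ValueError("speech frames and units must be aligned one-to-one")
    rng = random.Random(seed)
    mixed, replaced = [], []
    for state, uid in zip(speech_states, unit_ids):
        use_unit = rng.random() < mix_prob
        # Copy the chosen vector so the caller's inputs are never mutated.
        mixed.append(list(unit_embedding[uid]) if use_unit else list(state))
        replaced.append(use_unit)
    return mixed, replaced


# Toy example: 4 frames, 2-dimensional states, 3 possible units.
speech_states = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
unit_ids = [2, 2, 0, 1]
unit_embedding = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
mixed, replaced = mix_embeddings(speech_states, unit_ids, unit_embedding)
```

The returned `replaced` mask records which positions now carry unit embeddings. Feeding such a mixed sequence to the unit encoder is one way the two modalities could be pushed toward a shared representation space.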

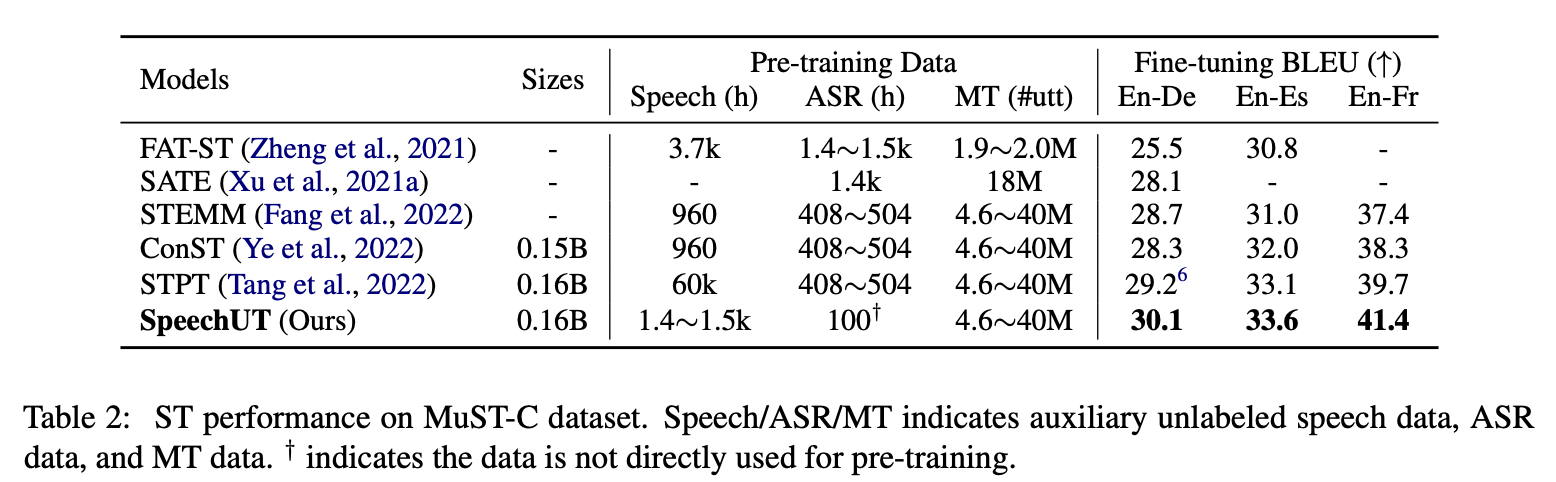

- Performance: The paper reports that SpeechUT achieves substantial improvements over strong baselines and sets new state-of-the-art performance on the LibriSpeech ASR and MuST-C ST benchmarks.

- Detailed Analyses: The paper includes detailed analyses to understand the proposed SpeechUT model better, and the code and pre-trained models are made available for the community.
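The relationship between the modules, the three pre-training tasks, and fine-tuning described above can be sketched as a small routing table. The module names follow the architecture bullet, but the assignment of modules to each task is an assumption made for illustration, not a statement of the paper's exact data flow. The sketch only checks two properties the summary claims: the unit encoder is shared across tasks, and fine-tuning needs no module that was not already pre-trained.

```python
# Hypothetical routing: which modules each pre-training task exercises (assumed).
PRETRAIN_ROUTES = {
    "S2U": ["speech_pre_net", "speech_encoder", "unit_encoder"],
    "MUM": ["unit_pre_net", "unit_encoder"],
    "U2T": ["unit_pre_net", "unit_encoder", "text_decoder"],
}

# Assumed path for downstream ASR / ST fine-tuning: speech in, text out.
FINETUNE_ROUTE = ["speech_pre_net", "speech_encoder", "unit_encoder", "text_decoder"]


def pretrained_modules(routes):
    """Union of all modules touched by at least one pre-training task."""
    return set().union(*(set(path) for path in routes.values()))


def shared_modules(routes):
    """Modules that every pre-training task passes through."""
    return set.intersection(*(set(path) for path in routes.values()))


def new_modules_at_finetune(routes, finetune_route):
    """Modules fine-tuning would need that no pre-training task has trained."""
    return set(finetune_route) - pretrained_modules(routes)


shared = shared_modules(PRETRAIN_ROUTES)
missing = new_modules_at_finetune(PRETRAIN_ROUTES, FINETUNE_ROUTE)
```

Under these assumed routes, `shared` contains only the unit encoder and `missing` is empty, which matches the claim that fine-tuning reuses the pre-trained modules without introducing new parameters.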
